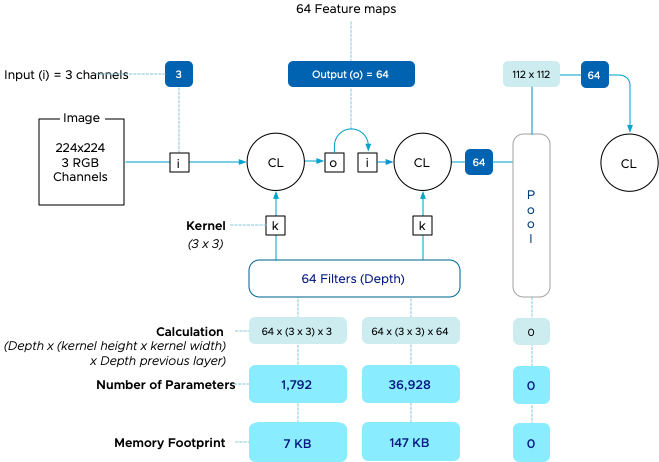

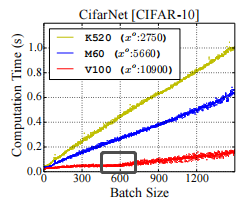

Figure 11 from Layer-Centric Memory Reuse and Data Migration for Extreme-Scale Deep Learning on Many-Core Architectures | Semantic Scholar

How to determine the largest batch size of a given model saturating the GPU? - deployment - PyTorch Forums

Effect of the batch size with the BIG model. All trained on a single GPU. | Download Scientific Diagram

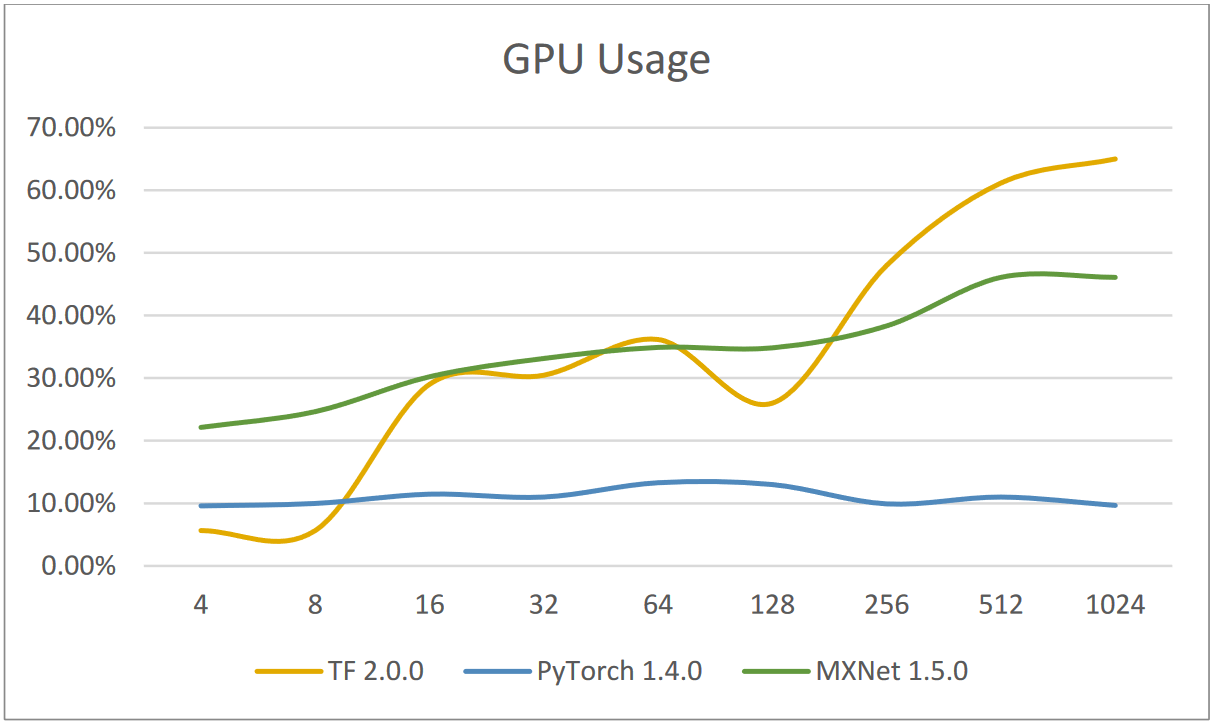

GPU Memory Size and Deep Learning Performance (batch size) 12GB vs 32GB -- 1080Ti vs Titan V vs GV100 | Puget Systems

deep learning - Effect of batch size and number of GPUs on model accuracy - Artificial Intelligence Stack Exchange

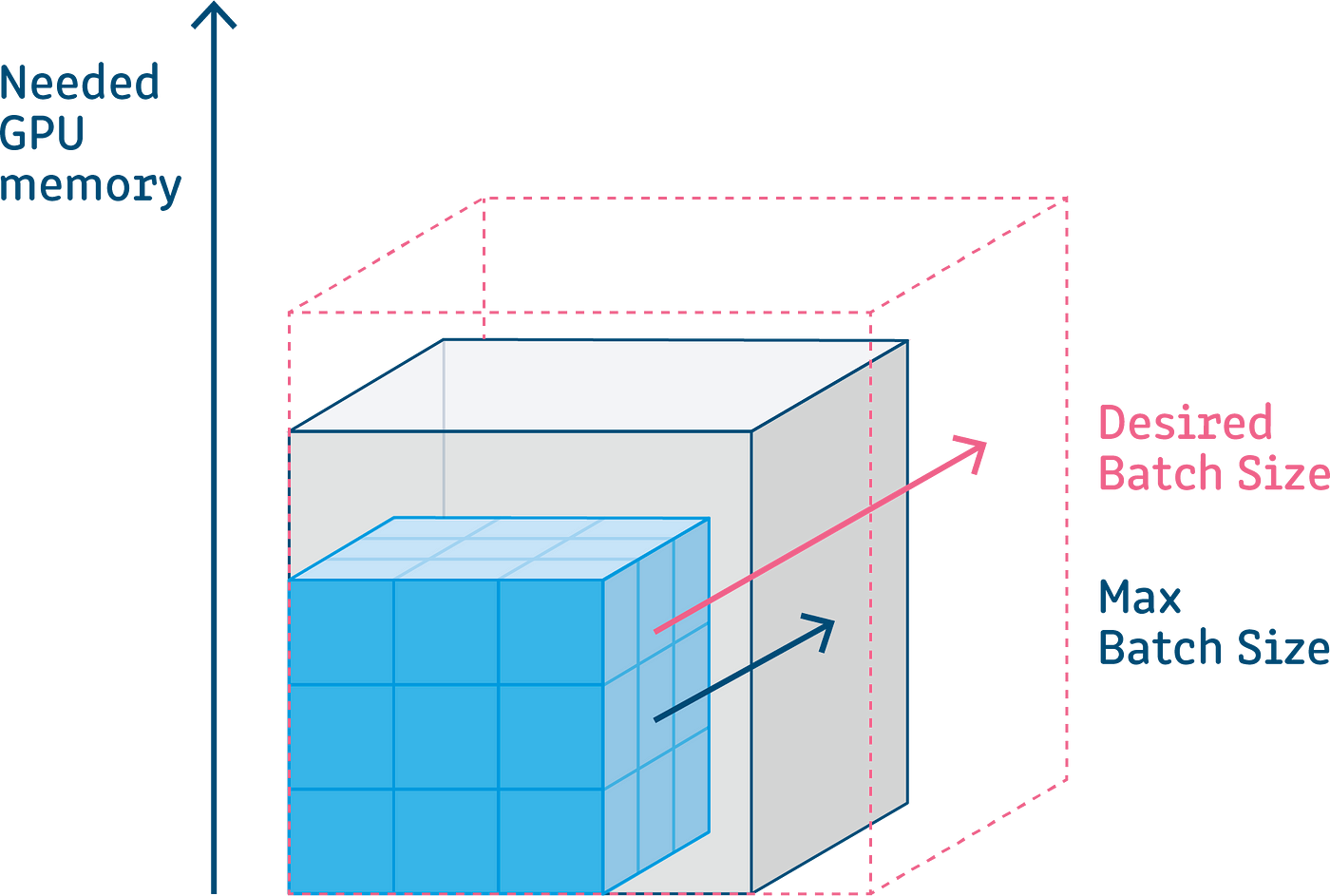

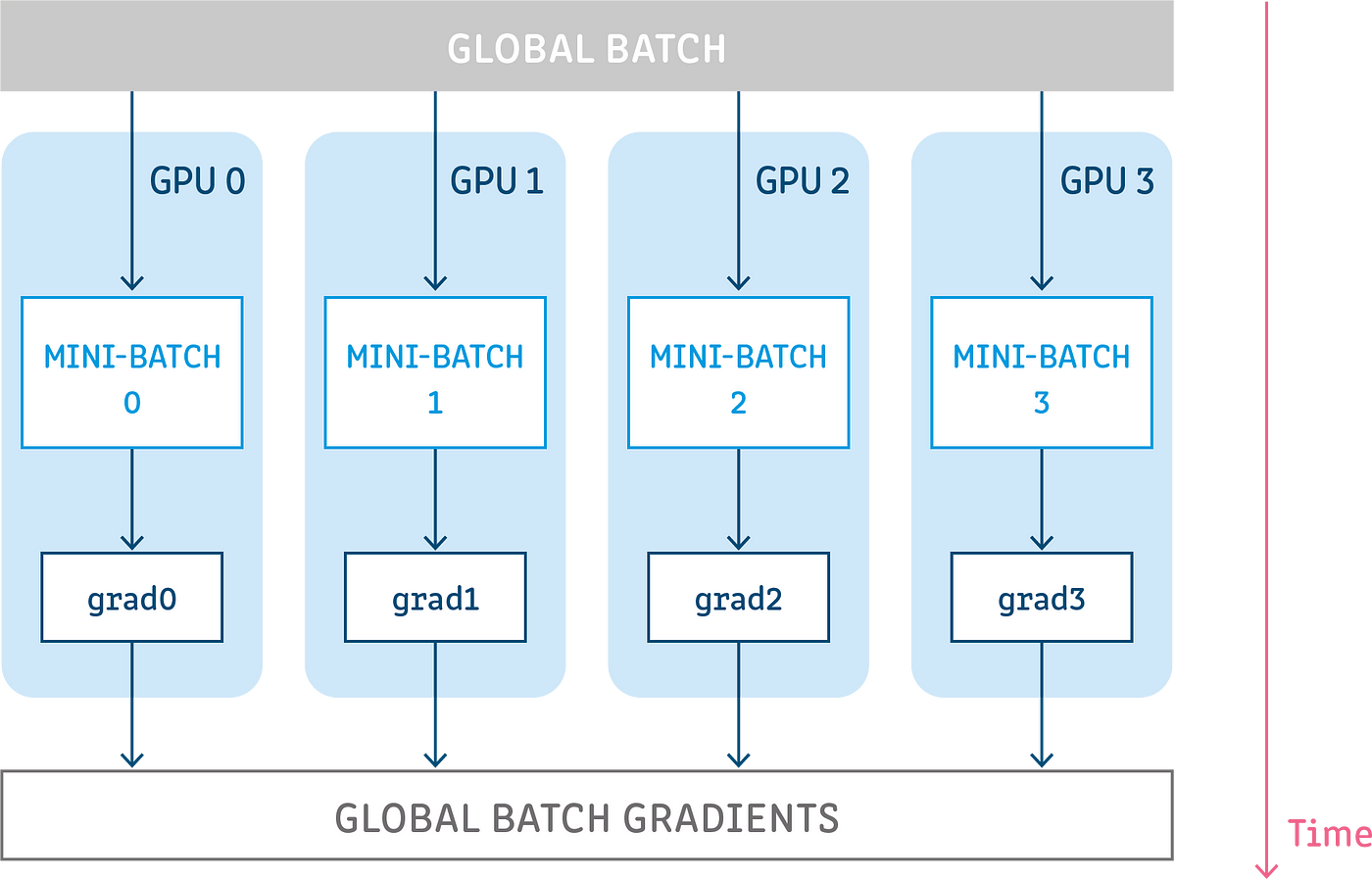

Relationship between batch size and GPU memory - Generative AI with Large Language Models - DeepLearning.AI

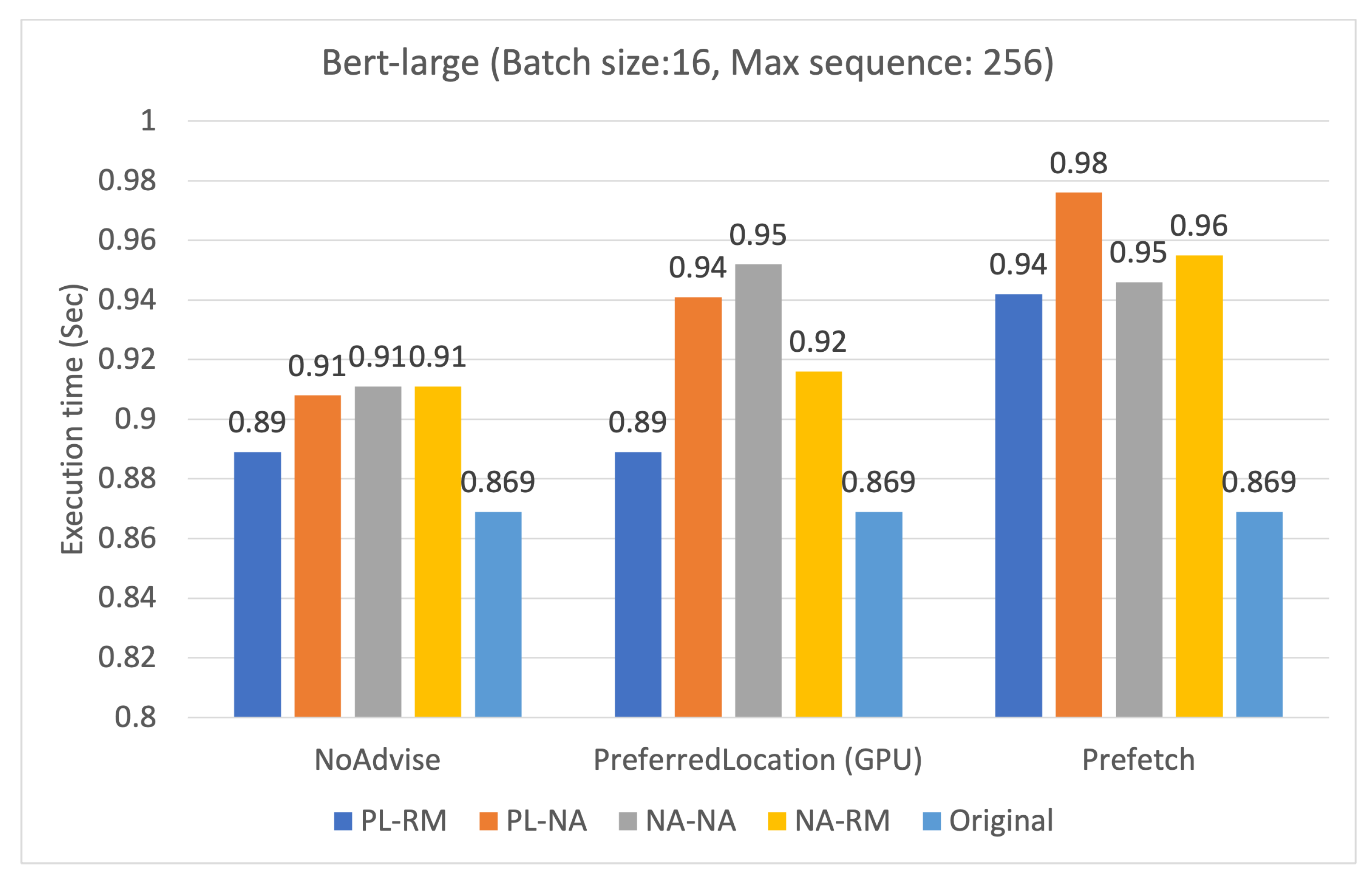

Applied Sciences | Free Full-Text | Efficient Use of GPU Memory for Large-Scale Deep Learning Model Training

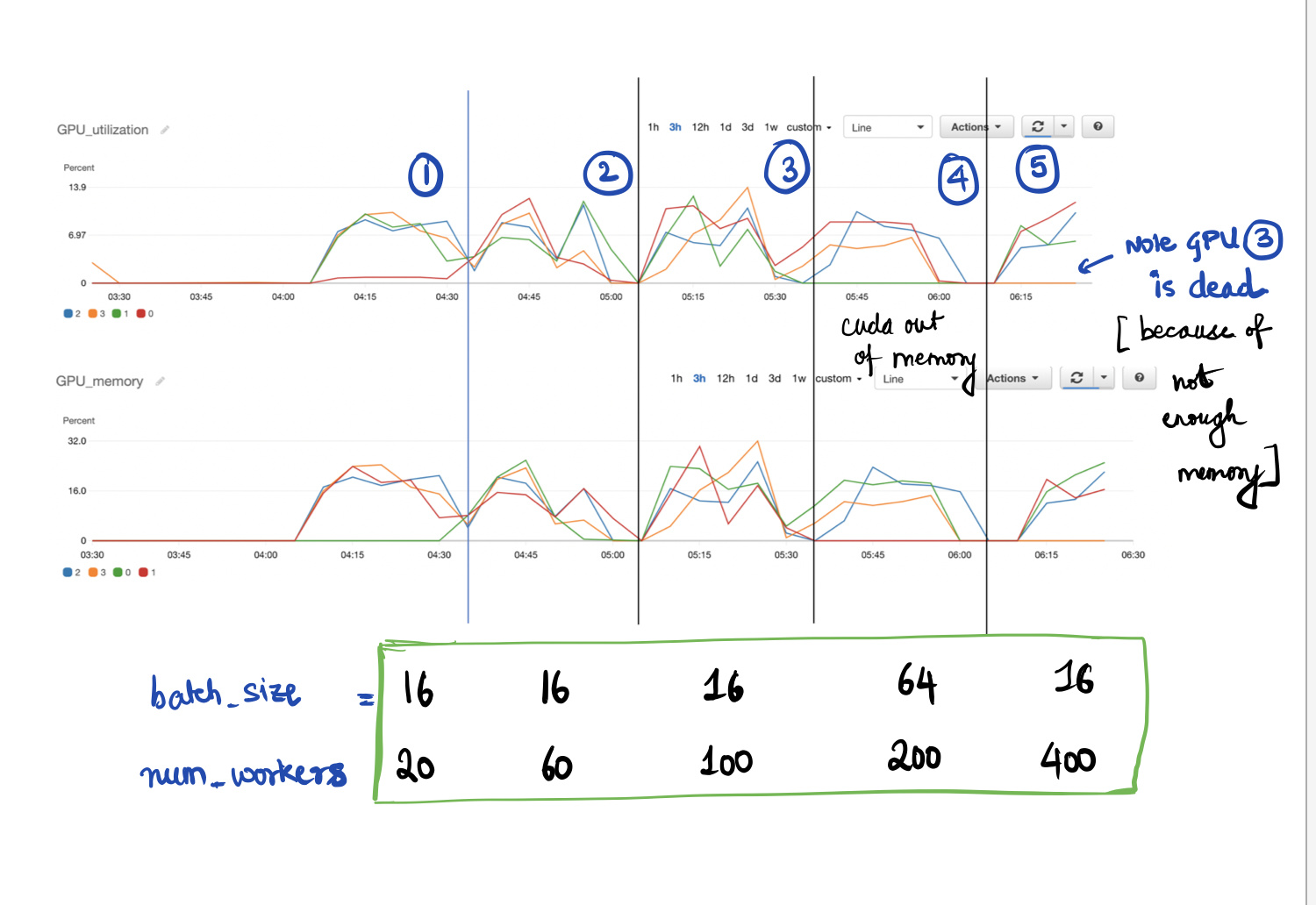

![\[Tuning\] Results are GPU-number and batch-size dependent · Issue #444 · tensorflow/tensor2tensor · GitHub](https://user-images.githubusercontent.com/15141326/33256370-1618ac16-d352-11e7-83c1-cfdcfa19a9ee.png)

[Tuning] Results are GPU-number and batch-size dependent · Issue #444 · tensorflow/tensor2tensor · GitHub

Understanding and Estimating GPU Memory Demands for Training LLMs in practice | by Max Shap | Medium

![\[Tuning\] Results are GPU-number and batch-size dependent · Issue #444 · tensorflow/tensor2tensor · GitHub](https://user-images.githubusercontent.com/15141326/33256270-a3795912-d351-11e7-83e4-ea941ba95dd5.png)

[Tuning] Results are GPU-number and batch-size dependent · Issue #444 · tensorflow/tensor2tensor · GitHub

Training a 1 Trillion Parameter Model With PyTorch Fully Sharded Data Parallel on AWS | by PyTorch | PyTorch | Medium